03: Training data-efficient image transformers & distillation through attention (DeiT)

Computer Vision

Transformer

Knowledge Distillation

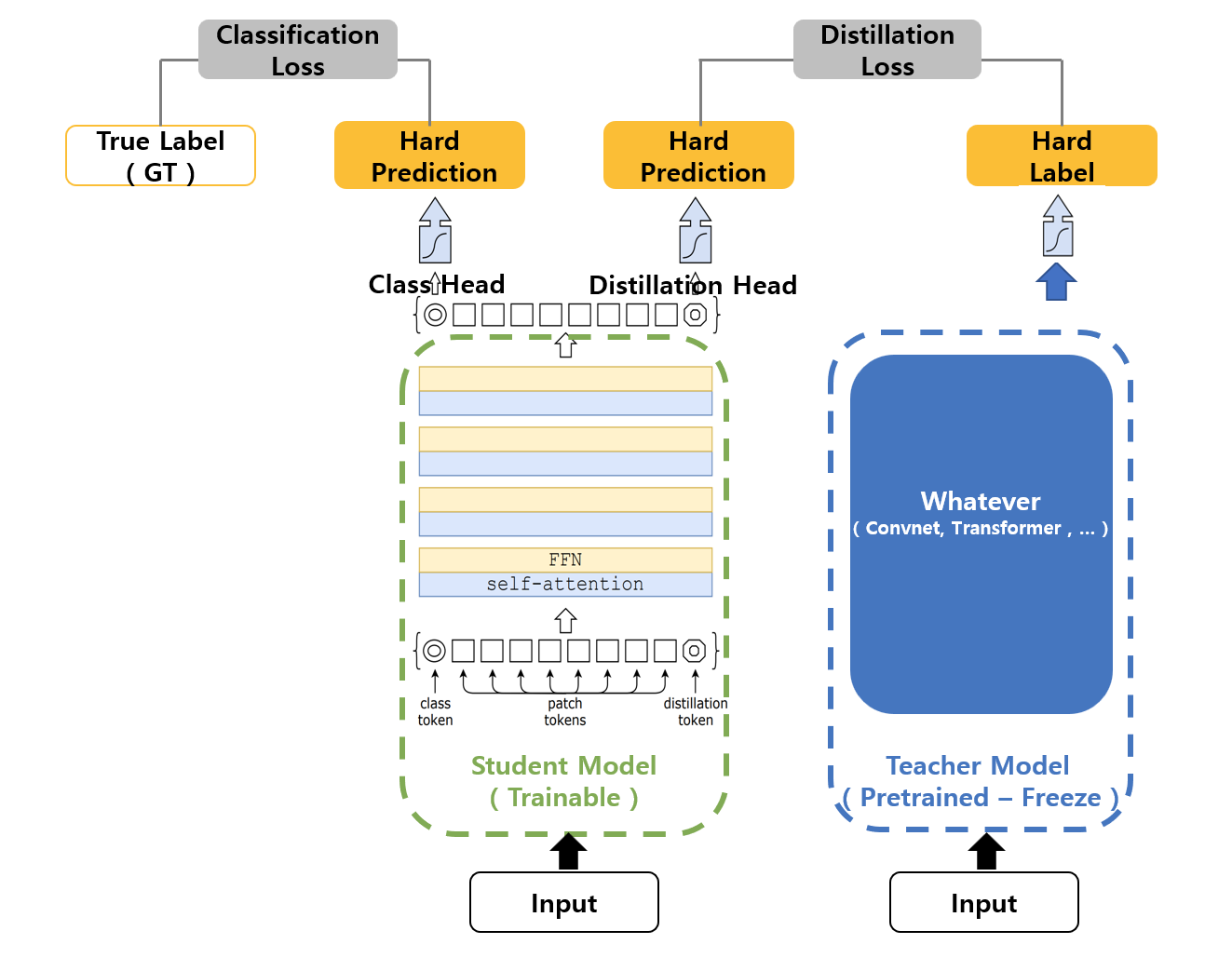

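Training data-efficient image transformers & distillation through attention (DeiT) proposes a knowledge-distillation method (a distillation token combined with attention-based distillation) that substantially improves the data efficiency of Vision Transformers, allowing ViT to be trained efficiently on small and medium-sized datasets and to reach performance comparable to CNNs.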
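To make the distillation-token idea concrete, here is a minimal sketch of a distillation loss in pure Python. It assumes the hard-label variant: the class-token head is trained against the ground-truth label, the distillation-token head is trained against the teacher's predicted class, and the two terms are weighted equally. The function names, the list-of-floats logit representation, and the equal weighting are illustrative assumptions, not details stated in the summary above.

```python
import math


def cross_entropy(logits, target):
    """Cross-entropy of softmax(logits) against an integer class index."""
    m = max(logits)  # subtract the max for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]


def hard_distillation_loss(cls_logits, dist_logits, teacher_logits, label):
    """Hypothetical helper: equal-weight hard-label distillation loss.

    cls_logits:     student output from the class-token head
    dist_logits:    student output from the distillation-token head
    teacher_logits: output of the (frozen) teacher, e.g. a CNN
    label:          ground-truth class index
    """
    # The teacher's hard decision becomes the target for the distillation head.
    teacher_label = max(range(len(teacher_logits)), key=teacher_logits.__getitem__)
    loss_cls = cross_entropy(cls_logits, label)
    loss_dist = cross_entropy(dist_logits, teacher_label)
    return 0.5 * loss_cls + 0.5 * loss_dist


# Usage: two classes, teacher disagrees with the ground-truth label.
loss = hard_distillation_loss(
    cls_logits=[0.0, 0.0],
    dist_logits=[0.0, 0.0],
    teacher_logits=[1.0, 3.0],
    label=0,
)
```

In this sketch the two heads can receive different targets for the same image, which is what lets the distillation token learn from the teacher while the class token still learns from the labels.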
